This post is a response to "How Might We Measure in the Appropriate Timeframe?" Read more of the conversation here.

Many of the blog entries to date have focused on evaluation, ranging from longitudinal research-based studies to systems theory and the importance of designing the display of data for the greatest impact.

While many of my colleagues and new friends have written important and interesting posts, I am going to attempt to simplify my short entry down to three essential tenets:

- Timing is everything.

- The time to start measuring is now.

- We all need deadlines.

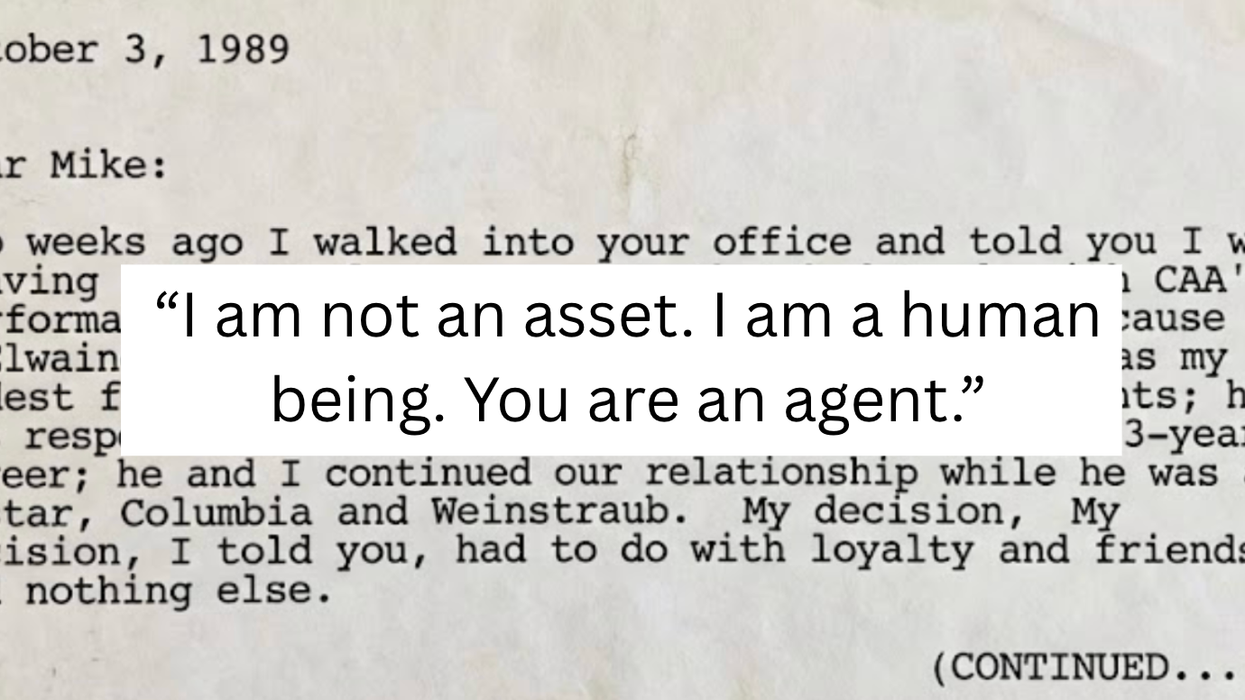

Otis knew before they did.
