Some current college students welcome our A.I. overlords, especially when it comes to writing term papers. ChatGPT has become a popular way for many students to cut corners on essays, to the point where some barely write anything at all aside from the prompt. However, a study shows that ChatGPT and other artificial intelligence programs aren't fooling college professors and can expose students who are slacking off.

A published international study shows that college professors can tell which essays are genuinely written by students and which are compiled and cooked up by ChatGPT. Researchers at the University of East Anglia and other participating universities analyzed 145 essays written by British university students and 145 essays written by ChatGPT, with both batches covering the same topics. They found that while A.I. and large language models (L.L.M.s) wrote with proper grammar and academic coherence, their essays lacked the engagement techniques that humans typically use.

The essays written by human students were easily spotted, as the average one contained nearly three times as many engagement features as the A.I.-generated essays. These engagement techniques include personal asides, rhetorical questions, reader mentions, and persuasive language that helps back up an essay’s argument or perspective. They make an essay feel like a conversation with the reader, rather than a set of facts being “talked at” the reader by ChatGPT. Without additional, specific prompting, A.I. is incapable of making dry subject matter compelling to read with a personal touch.

While A.I. will likely improve over time and concern about its use for outright cheating continues to grow, university officials are more concerned about students cheating themselves. “When students come to school, college, or university, we’re not just teaching them how to write, we’re teaching them how to think – and that’s something no algorithm can replicate,” said Prof. Ken Hyland, a co-author of the study. The worry is that if students don’t write the essays themselves, they won’t exercise the critical thinking skills that could help them later in their chosen careers and, frankly, life in general.


While there is debate over the ethics of A.I. and the current environmental impact of its expansion, there are some valid ways to use A.I. as a tool for humans rather than a substitute for actual human work. For example, when it comes to writing essays, an A.I.-generated essay can be used as a teaching tool to help students learn and perfect persuasive writing techniques by filling in the blanks. In addition, they can learn how to better spot errors and incorrect information, as ChatGPT compiles information from across the Internet and not just from vetted sources.

There are some genuinely positive uses of generative A.I. that aren’t barging into creative fields. Scientists have successfully used it to map genomes in minutes instead of meticulously doing so over days. It can be used as a cybersecurity tool to better detect threats. The finance sector can use it to develop stock forecasts, write up tax forms, and summarize financial data. But there is one key thing all of those A.I. applications have in common: their output needs to be fact-checked and analyzed by human experts.

As A.I. becomes more common in our day-to-day lives, it’ll become more important to properly discern its applications and to realize the truth: it’s oversold as a human replacement and undersold as a potentially valuable tool. We just have to become more imaginative in giving A.I. unimaginative applications.

This article originally appeared in June.


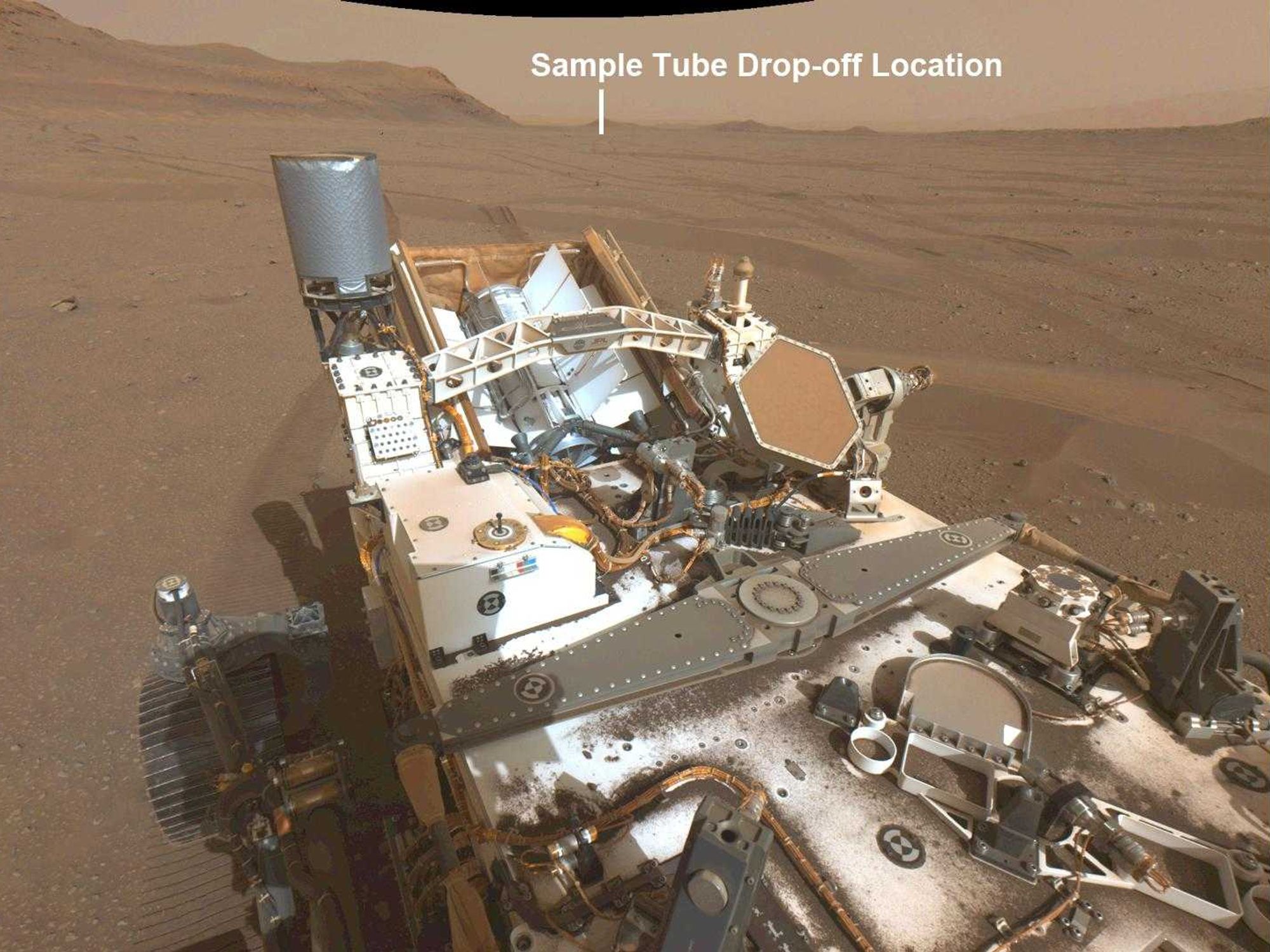


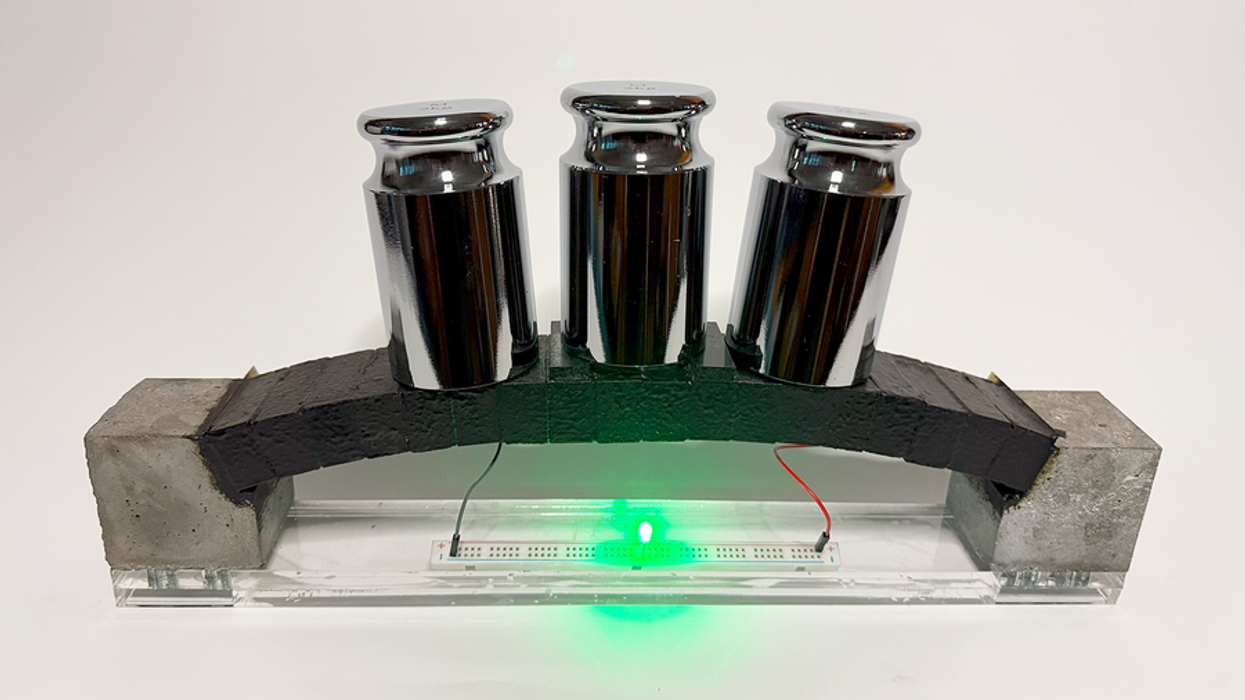
